Decoding Science 006: Nobel Laureates and AI, Materials for Superconducting Qubits, Bottlenecks of Artificial Research Engineers and Unstable Singularities

Welcome to Decoding Science: every other week, our writing collective highlights notable news, from the latest scientific papers to the latest funding rounds in AI for Science, and everything in between. All in one place.

What we read

Thoughts on The Curve [Nathan Lambert, Interconnects, October 2025]

Lambert shares notes following a conversation at The Curve, an AI conference hosted earlier this month by the Golden Gate Institute. The first half of the article is of particular interest here because it lays out his reasoning on timelines for AI for Science.

Here, I want to focus on his argument for why the “AI Research Engineer” (RE) role resists clean automation timelines. Per Lambert, automated REs (agents that can carry out the whole sequence of the research methodology end to end) are feasible within the next few years, with some caveats. He argues that RE is a broad category whose components will be automated at different rates. Some components will be automated quickly, especially with more compute, while others will see a narrowing but persistent bottleneck where human insight is still necessary. Yet “to check the box of automation, the entire role needs to be replaced.”

Beyond technical capabilities, Lambert notes that different modes of scientific discovery resist automation to different degrees. Cross-pollinating ideas between existing fields would be much easier for agents than producing truly transformative breakthroughs, which depend on “being immersed in the state of the art and having a brilliant insight that makes anywhere from a ripple causing small performance gain to a tsunami reshaping the field.” Moreover, the marketplace of ideas, where researchers convince colleagues to pursue specific directions, will keep its human core even as everyone gains “superpowers on making evidence to support their claims.”

Lambert’s final point on the friction inherent in autonomous AI REs rests on the “inevitable curse of complexity”: each advance in AI systems requires ever more elaborate infrastructure, tooling, and wrappers. He argues that this will create a drag ensuring that progress “will feel much more linear rather than exponential” going forward.


Active-Learning Inspired Ab Initio Theory-Experiment Loop Approach for Management of Material Defects: Application to Superconducting Qubits [Chaudhari et al., arXiv, October 2025]

The foundations of superconducting qubits were laid in 1985. That year, John Clarke, Michel Devoret, and John Martinis showed that quantum tunneling could occur at a macroscopic scale in a Josephson junction (JJ), which consists of two layers of superconducting material separated by a thin insulating barrier [1]. In a superconductor, electrons form pairs that move coherently and without resistance through the material lattice. In a JJ, these pairs can tunnel through the insulating layer, hopping from one superconductor to the other. What set the 1985 experiments apart was the demonstration that the junction circuit as a whole, containing a vast number of paired electrons, behaved as a single quantum system: it tunneled out of its zero-voltage state and occupied discrete energy levels. Showing that an engineered electrical circuit obeys quantum mechanics was a crucial first step toward translating the theoretical principles of quantum computing to the real world.

Since then, innovations in cryogenic control, material layering, and algorithmic organisation have moved the field forward incrementally, albeit at cryogenic speeds, one might note. But why has progress been slow? Why do we still carry silicon chips in our pockets rather than qubit-based devices? Because materials selection remains the biggest bottleneck. Defects and unwanted oxides at material interfaces are sources of decoherence and noise. Suppressing them is critical for qubits to hold distinguishable, coherent superposition states long enough to compute with.

To this end, the active-learning framework recently proposed by Chaudhari et al. offers a distinctive approach to finding new material combinations. The authors combine density functional theory (DFT) calculations and a trained logistic regression model with experimental validation. This ‘Material Genome Loop’-inspired setup was used to identify new materials to interface with niobium. As is usual for logistic regression, the inputs were continuous-valued descriptors. The output was a probability of oxide formation, thresholded to a binary prediction. The descriptors fell into two groups: 1. defect formation energies calculated with DFT, and 2. thermodynamic descriptors (fitting parameters). Computational analysis showed that the thermodynamic descriptors in particular were the dominant factor in determining whether an interface would oxidise.

Think of an oxygen atom as a player in a simulated game. Its possible ‘moves’ were:

- moving from the candidate metal into the niobium layer;
- moving from the candidate metal into a niobium oxide cluster;
- moving from a metal oxide into an interstitial site in the lattice.

The last move effectively freed the oxygen atom to migrate through grain boundaries in the material and oxidise the interface.
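For readers less familiar with the model class, here is a minimal sketch of the logistic-regression step. Continuous descriptors go in, a probability of oxide formation comes out, and a 0.5 threshold turns that probability into a binary prediction. The two descriptors and all training values are synthetic toy numbers, not data from Chaudhari et al. The plain gradient-descent fit is likewise an assumption for illustration. The paper's actual descriptors, fitting procedure, and threshold may differ.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: Sequence[float], x: Sequence[float]) -> float:
    """Predicted probability that an oxide forms for descriptor vector x."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def predict_label(w: Sequence[float], x: Sequence[float], threshold: float = 0.5) -> int:
    """Binary prediction: 1 = oxide forms, 0 = oxide suppressed."""
    return int(predict_proba(w, x) >= threshold)


def fit_logistic(X: Sequence[Sequence[float]], y: Sequence[int],
                 lr: float = 0.5, epochs: int = 5000) -> List[float]:
    """Fit weights [bias, w_1, ..., w_d] by batch gradient descent on the log-loss."""
    n, d = len(X), len(X[0])
    w = [0.0] * (d + 1)
    for _ in range(epochs):
        grad = [0.0] * (d + 1)
        for xi, yi in zip(X, y):
            err = predict_proba(w, xi) - yi  # derivative of log-loss w.r.t. the logit
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w


# Synthetic toy training set (NOT from the paper): each row holds two hypothetical
# descriptors, standing in for e.g. a DFT defect energy and a thermodynamic fit parameter.
X_train = [[0.2, 0.1], [0.4, 0.3], [0.3, 0.5], [1.5, 1.2], [1.8, 1.6], [1.2, 1.9]]
y_train = [0, 0, 0, 1, 1, 1]
weights = fit_logistic(X_train, y_train)
print(predict_proba(weights, [0.25, 0.2]), predict_label(weights, [0.25, 0.2]))
```

The probability itself is what Figure 1 plots as the inner circle color; the thresholded label is what gets compared against experiment.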

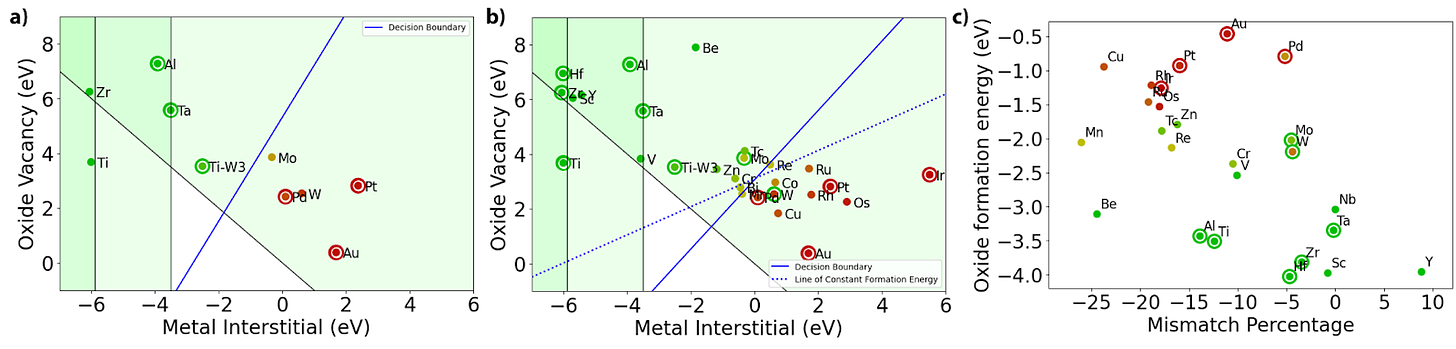

What strengthens the paper is the tight integration of theoretical predictions with experimental validation. In Figure 1, the probability of oxide formation predicted by the model (inner circle color) is compared with experimentally observed oxide formation (outer circle color). The active-learning cycle ran as follows: the model recommended candidate materials → the authors tested them experimentally → the results were fed back to retrain the model. This cycle narrowed the search space and surfaced the top candidates. Combined with materials expertise in choosing crystal structures that match niobium's, it allowed the authors to identify the most promising Josephson junction materials for preventing oxide formation.

Figure 1: Energy to move into a metal interstitial compared to an oxygen vacancy site. (a and b) Green regions indicate that an oxygen migration pathway is inhibited. Where regions overlap, more than one pathway is inhibited, so the darker the green tint, the more pathways are inhibited. By this coloring scheme, the top left-hand corner emerges as the most promising region for preventing oxide formation. The outer circle color shows whether Nb oxide formation was experimentally suppressed (green) or not (red). The inner circle color shows the probability of forming an oxide as predicted by the logistic regression model. (c) Lattice mismatch (%) between each candidate material and BCC niobium, plotted against oxide formation energy.
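The model → experiment → retrain cycle can be sketched as follows. Everything here is hypothetical. The candidate names (M1 to M10) and their single descriptor are made up. The `run_experiment` function stands in for a real oxidation test. The acquisition rule greedily tests the candidates the model rates least likely to oxidise. That is one simple choice, not necessarily the rule the authors used.

```python
import math
from typing import Callable, Dict, List, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic_1d(xs: List[float], ys: List[int],
                    lr: float = 1.0, epochs: int = 3000) -> Tuple[float, float]:
    """Fit p(oxide) = sigmoid(b + w * x) by batch gradient descent on the log-loss."""
    b, w, n = 0.0, 0.0, len(xs)
    for _ in range(epochs):
        gb = gw = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(b + w * x) - y
            gb += err
            gw += err * x
        b -= lr * gb / n
        w -= lr * gw / n
    return b, w


def active_learning_loop(pool: Dict[str, float], experiment: Callable[[float], int],
                         seed: List[str], rounds: int, batch_size: int
                         ) -> Tuple[Dict[str, int], Tuple[float, float]]:
    """Train -> recommend -> 'test' -> retrain, for a fixed number of rounds."""
    labeled = {name: experiment(pool[name]) for name in seed}

    def train() -> Tuple[float, float]:
        names = list(labeled)
        return fit_logistic_1d([pool[n] for n in names], [labeled[n] for n in names])

    for _ in range(rounds):
        b, w = train()
        untested = [n for n in pool if n not in labeled]
        if not untested:
            break
        # Greedy acquisition: test the candidates rated least likely to oxidise.
        untested.sort(key=lambda n: sigmoid(b + w * pool[n]))
        for n in untested[:batch_size]:
            labeled[n] = experiment(pool[n])  # stands in for a lab measurement
    return labeled, train()


# Hypothetical pool: ten made-up candidates, one made-up descriptor each.
pool = {f"M{i}": i / 10 for i in range(1, 11)}


def run_experiment(descriptor: float) -> int:
    """Hypothetical stand-in for an oxidation test: 1 = oxide formed."""
    return int(descriptor > 0.55)


labeled, (b, w) = active_learning_loop(pool, run_experiment, seed=["M1", "M10"],
                                       rounds=3, batch_size=2)
print(sorted(labeled.items()))
```

Each round only "spends" experiments on a small batch. This mirrors how the loop narrows the search scope instead of testing every candidate.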

So why does it matter that zirconium (Zr) was ranked best, followed by tantalum (Ta), hafnium (Hf), and scandium (Sc)? Because to date, most superconducting qubit devices still use aluminium (Al) as the default material for their Josephson junctions. Yet in Chaudhari et al.'s active-learning framework, aluminium does not emerge as the best candidate to pair with niobium. Rational design strategies that account for lattice mismatch and predicted oxide formation energy per oxygen atom could re-evaluate material choices in Josephson junction devices. Such strategies could uncover new superconductor/insulator combinations that move the field forward, faster, one tunneling step at a time.
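One simple way to combine the two criteria is to filter candidates by lattice mismatch with niobium, then rank the survivors by predicted oxide probability. This sketch takes the mismatch as the relative difference in lattice parameter, 100 × (a_candidate - a_Nb) / a_Nb, with a_Nb ≈ 3.30 Å for BCC niobium. The paper's exact mismatch definition, especially for non-cubic structures, may differ. The candidate names, their values, and the 5% tolerance are all hypothetical.

```python
from dataclasses import dataclass
from typing import List

NB_LATTICE_A = 3.30  # approximate lattice parameter of BCC niobium, in angstroms


@dataclass
class Candidate:
    name: str         # hypothetical label
    lattice_a: float  # lattice parameter in angstroms (made-up values below)
    p_oxide: float    # model-predicted probability that Nb oxide forms


def lattice_mismatch_percent(a_candidate: float, a_reference: float = NB_LATTICE_A) -> float:
    """Relative lattice-parameter mismatch, in percent."""
    return 100.0 * (a_candidate - a_reference) / a_reference


def shortlist(candidates: List[Candidate], max_mismatch_pct: float = 5.0) -> List[Candidate]:
    """Drop poorly matched candidates, then rank the rest by predicted oxide probability."""
    matched = [c for c in candidates
               if abs(lattice_mismatch_percent(c.lattice_a)) <= max_mismatch_pct]
    return sorted(matched, key=lambda c: c.p_oxide)


# Hypothetical candidates; none of these numbers come from the paper.
candidates = [
    Candidate("X1", 3.31, 0.20),
    Candidate("X2", 3.60, 0.05),  # ~9% mismatch: filtered out despite its low p_oxide
    Candidate("X3", 3.25, 0.10),
    Candidate("X4", 3.35, 0.70),
]
ranked = shortlist(candidates)
print([c.name for c in ranked])
```

Filtering before ranking keeps a low-probability but poorly matched candidate off the shortlist. A weighted score combining both criteria would be an equally reasonable design choice.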

Discovering new solutions to century-old problems in fluid dynamics [Wang et al., DeepMind, September 2025]

A major unresolved problem in physics is whether certain fluid dynamics equations (e.g. the 3D Euler and Navier-Stokes equations) can develop singularities. These are points in time and space where some quantity, such as velocity or pressure, becomes infinite. Finding them would help mathematicians pin down the limitations of these equations and improve our understanding of fluid dynamics. In fact, the question of whether smooth solutions of the 3D Navier-Stokes equations can break down in this way is notorious. It is one of the seven Millennium Prize Problems, six of which remain unsolved. Unstable singularities are those that even a slight perturbation can destroy. They are widely thought to be the kind most likely to arise in these two equations.
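To make "becomes infinite in finite time" concrete, consider a textbook toy example. It is an ordinary differential equation, not one of the fluid equations above:

```latex
\frac{du}{dt} = u^2, \qquad u(0) = u_0 > 0
\quad\Longrightarrow\quad
u(t) = \frac{u_0}{1 - u_0 t} = \frac{1}{T^{*} - t}, \qquad T^{*} = \frac{1}{u_0}.
```

The solution is perfectly smooth for t < T* but grows without bound as t approaches T*, scaling like (T* - t)^(-1). In the fluid setting, how fast a quantity diverges near such a point is called the blow-up rate.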

The DeepMind team used physics-informed neural networks (PINNs), together with a training framework that pushes the PINN to near machine precision, to discover new families of unstable singularities in several fluid equations. They also observed that, in two of the equations, the speed of the blow-up (how fast the quantity tends to infinity) is correlated with how unstable the solution is.
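PINNs are trained to make the governing equation's residual small at sampled "collocation" points. Here is a minimal sketch of that principle on a deliberately trivial problem: u'(x) + u(x) = 0 with u(0) = 1, whose exact solution is e^(-x). The sketch swaps the neural network for a polynomial and gradient-based training for a linear least-squares solve. It therefore illustrates residual minimization only. It is not DeepMind's method or its high-precision optimizer, and the function names are hypothetical.

```python
import math
from typing import List


def solve_linear(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting (small systems only)."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def fit_residual_minimizer(degree: int = 6, n_points: int = 25) -> List[float]:
    """Fit u(x) = 1 + sum_k c_k x^k so that u'(x) + u(x) is close to 0 on [0, 1].

    The ansatz satisfies the boundary condition u(0) = 1 by construction, so only
    the equation residual at the collocation points needs to be minimized.
    """
    xs = [i / (n_points - 1) for i in range(n_points)]
    # Residual r(x) = u'(x) + u(x) = 1 + sum_k c_k (k x^(k-1) + x^k), i.e. (A c)(x) + 1.
    A = [[k * x ** (k - 1) + x ** k for k in range(1, degree + 1)] for x in xs]
    # Least squares: minimize ||A c + 1||^2 via the normal equations (A^T A) c = -A^T 1.
    AtA = [[sum(row[p] * row[q] for row in A) for q in range(degree)] for p in range(degree)]
    rhs = [-sum(row[p] for row in A) for p in range(degree)]
    return solve_linear(AtA, rhs)


def u(coeffs: List[float], x: float) -> float:
    """Evaluate the fitted ansatz at x."""
    return 1.0 + sum(c * x ** (k + 1) for k, c in enumerate(coeffs))


coeffs = fit_residual_minimizer()
print(u(coeffs, 1.0), math.exp(-1.0))  # fitted vs exact value at x = 1
```

Building the boundary condition into the ansatz (a "hard constraint") means the loss only has to penalize the equation residual. Many PINN setups instead add a separate boundary-loss term.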

Nobel 2025 Laureates: Current Activities in AI - HC

This year’s Nobel announcements honored foundational science rather than AI methods. In Medicine, Mary E. Brunkow, Fred Ramsdell, and Shimon Sakaguchi were recognized for discoveries establishing peripheral immune tolerance and regulatory T cells (FOXP3). In Physics, John Clarke, Michel H. Devoret, and John M. Martinis were cited for experiments showing macroscopic quantum behavior in superconducting circuits—work that underpins today’s quantum technologies. In Chemistry, Susumu Kitagawa, Richard Robson, and Omar M. Yaghi were honored “for the development of metal-organic frameworks (MOFs),” porous crystalline materials whose tunable architectures enable applications from gas storage and separations to catalysis.

Sakaguchi’s recent work intersects with AI/ML mainly via single-cell immunology. A 2024 CD4⁺ T-cell atlas uses high-dimensional single-cell data and presents a machine-learning framework to predict autoimmune states from T-cell profiles (Cell Genomics 2024). While the Nobel Prize itself is for foundational immunology, this line of work illustrates how his community now leverages ML for disease stratification and mechanism discovery.

Devoret and Martinis connect to AI through quantum error correction (QEC), where learning-based control/decoding is increasingly standard. Devoret’s group published a 2025 Nature paper demonstrating qudit QEC beyond break-even, explicitly optimized with a reinforcement-learning agent - a direct AI application to quantum hardware. Martinis’ former Google Quantum AI program advanced neural-network/transformer decoders for the surface code on Sycamore-class processors, showing state-of-the-art learned decoding on experimental data (Nature 2024). Together, these works exemplify AI as an enabling tool for quantum control and scalability, even though the Physics Nobel recognizes earlier foundational superconducting-circuit breakthroughs.

Omar M. Yaghi is actively weaving AI into reticular chemistry: his team co-led MOFGen, an “agentic AI” pipeline that generated hundreds of thousands of candidate MOFs and guided the successful synthesis of several “AI-dreamt” frameworks, demonstrating end-to-end AI-assisted materials discovery (arXiv 2025). His group has also advocated for—and helped launch—infrastructure to mainstream these tools, notably Berkeley’s Bakar Institute of Digital Materials for the Planet (BIDMaP), where recent pieces outline how generative AI can streamline lab workflows in reticular chemistry. Earlier group work used ChatGPT for literature mining of MOF synthesis conditions and for building predictive synthesis models, foreshadowing today’s agentic systems (JACS 2023).

Notable Deals:

Periodic Labs emerges from stealth with a $300 million seed round led by a16z to build an AI scientist that accelerates discoveries in physics, chemistry, and other fields.

Axiom Math, founded by a former Meta researcher, has raised $64 million in its seed round to build an AI that can generate and solve complex mathematical problems.

Did we miss anything? Would you like to contribute to Decoding Science by writing a guest post? Drop us a note here or chat with us on Twitter: @pablolubroth @ameekapadia